Archive for the ‘BI’ Category

Statistics are hugely underused. Even when they are used, they are often used improperly. Much of the bolstering of weak arguments, miscommunication and ad-hoc ideology creation by individuals and organisations from the industrial revolution onwards is down to poor use of statistics.

Entire industries are devoted to generating statistics, duplicating monstrous amounts of effort. Yet when statistics are cited (e.g. in sales pitches, organisational reports, building/product tolerances, war crimes tribunals, political and environmental manifestos, news articles and business plans), they are so heavily caveated as to be meaningless. This contrasts with the relatively deterministic, transparent and auditable approach organisations take when producing BI to support their own business decisions. Coupling this BI with government statistics is often necessary for the best decision-making support, so commercial BI is itself hamstrung by poor statistics.

There are two key reasons for this:

1) Statistics are hard to find. If you are looking for, say, the number of people currently working in London (a simple enough request, and one that many service organisations need answered), you will find it close to impossible. The UK Office for National Statistics (ONS) does not have this figure on its main site. Nor does the Greater London Authority or the newly released UK government linked data site. After wasting perhaps twenty minutes, you will be reduced to searching for “how many people work in London” and trawling through the answers others have given to the same question. You will receive answers, but many will come from small organisations or individuals who do not quote their sources. In the worst case, you may not even find these, and will instead have to take an unofficial figure for the whole of the UK and scale it down to London. If you search hard enough, you will find what you are looking for on an ONS micro-site (at a completely different URL from the main ONS site), but this data is over five years old.

2) There is also something of a myth that statistical interpretation is an art, that the general public can be confused (even sent entirely the wrong message) by engaging with statistics, and that only statisticians can work with this data. Certainly there is this side to statistical analysis (basically anything involving probability, subsets, distributions and meta-statistics), but for the most part both the general public and organisations are crying out for basic statistical information (the answer to one question without qualifiers, e.g. where/if etc.). Quite simply, that information needs to be on a web-site (we can all just about manage that now, thanks), produced or sponsored by the Government (we need a basic level of trust) and stamped with a creation date (we need to know if it is old). If statistics are estimates, we need to know that, and any proportions need to indicate the sample size. We need this because we are now sophisticated enough to know that “8/10 owners said their cats preferred it” carries less weight if the dataset is ten cats rather than 10K cats (we don’t really need the proportions themselves though, since we’re capable of working those out ourselves).
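To see why the sample size matters, the sketch below puts a rough 95% confidence interval around “8 out of 10” for ten cats and for 10,000 cats. It uses the Wilson score interval, which is one standard choice among several (our choice, not something the argument above depends on); the function name `wilson_interval` is our own.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for the proportion successes/n."""
    if n <= 0:
        raise ValueError("sample size must be positive")
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half_width, centre + half_width


# The same "8 out of 10" headline, backed by very different sample sizes.
for successes, n in [(8, 10), (8_000, 10_000)]:
    low, high = wilson_interval(successes, n)
    print(f"{successes}/{n}: plausible range {low:.0%} to {high:.0%}")
```

With ten cats the plausible range runs from roughly half to well over nine in ten; with ten thousand cats it narrows to about a percentage point either side of 80%.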

We don’t want graphs, since their scale can be manipulated. We don’t want averages, since they are similarly open to abuse (mean, median or mode?). If we make a mistake and relate subsets incorrectly, the people we are communicating with may spot it, and that in itself becomes part of the informational mix (perhaps we were ill-prepared and they should treat everything else we say with care). We don’t actually need sophisticated Natural Language Processing (NLP), BI or Semantic Web techniques to do this. It would be nice if it were linked data, but concentrate on sourcing it first. We really are not that bothered about accuracy either (since it is unlikely we are budgeting or running up accounts on government statistics).
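A small illustration of the “mean, median or mode?” problem, using Python's standard `statistics` module on a hypothetical set of salaries: the same six numbers support three quite different “averages”.

```python
from statistics import mean, median, mode

# Hypothetical salaries (in £k) for a small team with one highly paid director.
salaries = [20, 22, 22, 25, 30, 200]

print(f"mean:   {mean(salaries):.1f}")    # pulled sharply upwards by the single outlier
print(f"median: {median(salaries):.1f}")  # midpoint of the two middle values when sorted
print(f"mode:   {mode(salaries)}")        # most frequently occurring value
```

Whoever quotes “the average salary” can pick whichever of the three suits their argument, which is why a raw count with its source and date is often more useful.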

Mostly we are making decisions on this information and we are happy rounding to the nearest ten percent. Are we against further immigration? Is there enough footfall traffic to open a flower shop? Do renters prefer furnished or unfurnished properties in London? Which party has the record for the least taxation? What are the major industries for a given area? We just need all the governmental data to be gathered and kept current (on at least a yearly basis) on one site with a moderately well thought-out Query By Example (QBE)-based interface. That’s it.
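As a rough sketch of what a Query By Example interface does: the user fills in only the fields they care about on a blank “example” record, and every stored record matching those fields is returned. The catalogue structure, field names and values below are all hypothetical placeholders (not real statistics), chosen to carry the attributes argued for above: publisher, creation date, estimate flag and sample size.

```python
# Hypothetical catalogue entries: every field value is a placeholder, not a real statistic.
CATALOGUE = [
    {"question": "people working", "area": "London", "publisher": "Dept A",
     "estimate": True, "sample_size": None, "created": 2003},
    {"question": "people working", "area": "UK", "publisher": "Dept A",
     "estimate": True, "sample_size": None, "created": 2008},
    {"question": "furnished lets", "area": "London", "publisher": "Dept B",
     "estimate": False, "sample_size": 5000, "created": 2008},
]


def query_by_example(rows, example):
    """Return the rows that match every field the user filled in on the example form."""
    filled_in = {field: value for field, value in example.items() if value is not None}
    return [row for row in rows
            if all(row.get(field) == value for field, value in filled_in.items())]


# Blank (None) boxes on the form match anything.
form = {"question": "people working", "area": "London", "publisher": None}
for row in query_by_example(CATALOGUE, form):
    kind = "estimate" if row["estimate"] else "count"
    print(f'{row["publisher"]} ({row["created"]}): {kind}, sample size {row["sample_size"]}')
```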

Reading and writing have been fundamental human rights in developed countries for decades. Broadband Internet access is fast becoming one too. Surely access to consistent, underwritten government statistics belongs in this category as well. Where other political parties dispute the figures, they should be able to launch an inquiry into them, although too many inquiries will themselves become a statistic, open to interpretation. This is absolutely in the interest of organisations and current-affairs-aware individuals.

Comparing actual figures against targets is fundamental to any Performance Management (PM) system. PM solutions are typically built upon cubes and, correspondingly, cubes are built upon data marts. In a data mart, measurements are generally stored in fact tables at their lowest level, e.g. Cost per Organisational Unit (OU) per Day. These measurements are then aggregated along dimension levels, e.g. Cost per Area per Week is the sum of the daily OU figures for that week and area. This works well for actual figures, as they are almost always additive. Targets, however, can sometimes be independent, e.g. Target Cost per OU per Week is not necessarily the sum of the daily target costs for that week.

There may be legitimate business reasons for this, e.g. cost is heavily driven by the market or by economies of scale (EOS) and needs to be targeted manually or, for whatever reason, targets cannot be decomposed into fully additive components. This means that the PM solution has to either store the data for all levels in the fact tables or leave targets out of the data mart altogether. The latter option creates its own issues, though, as many front-end BI/PM tools can only connect to a single data source at a time.
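A minimal numeric sketch of why independent targets break simple roll-up: actual costs sum cleanly from day to week, but a weekly target set by hand need not equal the sum of the daily targets, so it has to be stored at week level. All figures are hypothetical.

```python
# Hypothetical figures for one OU over one five-day week (placeholders only).
daily_actual_cost = [100.0, 120.0, 90.0, 110.0, 80.0]
daily_target_cost = [105.0, 105.0, 105.0, 105.0, 105.0]

# Actuals are additive: the weekly actual is simply the sum of the daily rows.
weekly_actual_cost = sum(daily_actual_cost)

# A weekly target set manually (e.g. reflecting a bulk-purchase discount) is
# independent of the daily targets, so it cannot be derived by aggregation.
weekly_target_cost = 480.0
derived_weekly_target = sum(daily_target_cost)

print(f"weekly actual:           {weekly_actual_cost:.0f}")
print(f"weekly target (stored):  {weekly_target_cost:.0f}")
print(f"sum of daily targets:    {derived_weekly_target:.0f}")
print(f"variance to real target: {weekly_actual_cost - weekly_target_cost:+.0f}")
```

Summing the daily targets here would report the OU as under target for the week when, against the target actually set, it is over.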

Design alternatives for storing data at all levels in the fact tables are not well documented in the BI/PM design literature. The options are:

1) Single Fact Table. Storing all aggregates in the same fact table simplifies the data model, but has disadvantages: values are duplicated (e.g. the year value is stored in every day record), the fact table contains a large number of fields and the ETL process is more complex (it must either use updates or reload every level on each load).

2) Multiple Measures. To reduce the number of fields in the fact table, the tables could be split along time levels or measures. This option has the advantages of fewer measures per metric and no duplication along the Time dimension. Its disadvantages are likely duplication along the OU dimension and multiple fact tables.

3) Fact Table per Dimension Level. Defining a table for each of the dimension levels has the advantages of no field duplication and the fewest measures overall. Its disadvantage, again, is that there are multiple fact tables.

Option 3 is generally recommended, as it eliminates data duplication and simplifies the ETL process. It is somewhat unusual, as a star schema typically has one central fact table surrounded by multiple dimension tables. It is, however, a practical solution and was recently implemented for a large resources client.

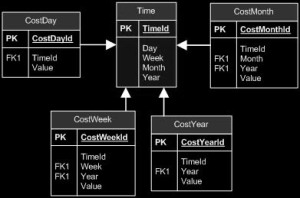

The fact tables are connected to the dimension tables at differing granularities, as follows:

The CostDay fact table is linked to the Time dimension table through its TimeId Foreign Key (FK). Fact tables with different granularities need an additional field linking them to the Time dimension at the desired granularity. All attributes within a given fact table therefore have to share the same granularity.
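A minimal sketch of Option 3 in SQLite, via Python's built-in `sqlite3` module. CostDay, TimeId and the Time dimension come from the description above; the names TimeDim, WeekKey, CostWeek, OUId, ActualCost and TargetCost, and all the figures, are hypothetical. The week-level table joins to the Time dimension through an extra WeekKey field rather than TimeId, so each fact table keeps a single granularity.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Time dimension at day grain; WeekKey (hypothetical) lets week-grain facts join to it.
CREATE TABLE TimeDim (
    TimeId  INTEGER PRIMARY KEY,
    DayDate TEXT    NOT NULL,
    WeekKey INTEGER NOT NULL
);
-- Day-grain facts: additive actuals, linked to TimeDim through the TimeId FK.
CREATE TABLE CostDay (
    TimeId     INTEGER NOT NULL REFERENCES TimeDim(TimeId),
    OUId       INTEGER NOT NULL,
    ActualCost REAL    NOT NULL
);
-- Week-grain facts: independently set targets, linked through WeekKey instead.
CREATE TABLE CostWeek (
    WeekKey    INTEGER NOT NULL,
    OUId       INTEGER NOT NULL,
    TargetCost REAL    NOT NULL
);
""")

conn.executemany("INSERT INTO TimeDim VALUES (?, ?, ?)",
                 [(1, "2009-03-02", 200910), (2, "2009-03-03", 200910), (3, "2009-03-09", 200911)])
conn.executemany("INSERT INTO CostDay VALUES (?, ?, ?)",
                 [(1, 7, 100.0), (2, 7, 120.0), (3, 7, 90.0)])
conn.executemany("INSERT INTO CostWeek VALUES (?, ?, ?)",
                 [(200910, 7, 200.0), (200911, 7, 100.0)])

# Actual vs target per OU per week: actuals are summed up from day grain,
# while targets are read directly at week grain.
rows = conn.execute("""
SELECT w.WeekKey, w.OUId, SUM(d.ActualCost) AS Actual, w.TargetCost AS Target
FROM CostWeek AS w
JOIN TimeDim AS t ON t.WeekKey = w.WeekKey
JOIN CostDay AS d ON d.TimeId = t.TimeId AND d.OUId = w.OUId
GROUP BY w.WeekKey, w.OUId, w.TargetCost
ORDER BY w.WeekKey
""").fetchall()

for week, ou, actual, target in rows:
    print(week, ou, actual, target, actual - target)
```

A cube tool would normally sit on top of tables like these; the query simply shows that both grains can be compared without duplicating any values.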

This (admittedly complex) model can later be simplified using whatever tools you use to maintain your cubes. SQL Server Analysis Services, for example, provides Perspectives, Measure Groups and Calculated Members that can be used to hide the complexity of the underlying data model from the user. Perspectives can be used to hide objects (e.g. fact tables) from the user, Measure Groups can be used to create logical groupings of measures, and Calculated Members can derive new measures, such as variance to target, from existing ones.

4) Directly address credit crunch sensibilities. BI endeavours can be expensive and hard to articulate in terms of benefit. You may need to plan a BI strategy, but right now projects need a critical mass of internal support and short-term benefits in order to obtain funding and/or avoid being postponed. Key areas are:

a. Cash flow management. Cash is King. Cash flow metrics (e.g. Price to Cash Flow and Free Cash Flow) show managers and potential buyers how much cash an organisation can generate. Data mining can predict cash flow problems (e.g. bad debt), allowing recovery and/or credit arrangements to be made in a timely (and typically cheaper) manner.

b. Business planning capabilities. While many organisations have information systems in place that can generate representative management information, the level of manual intervention needed to deliver essential reporting can result in unacceptable delays and therefore data latency and inconsistency. This directly impacts the organisation’s ability to predict and respond to threats to its operations (of which there are many in an adverse climate). Outcomes can include financial penalties, operational inefficiencies and lower than desired customer satisfaction. Building habitual Performance Management (PM) cycles of Monitoring (What happened? What is happening?), Analysing (Why?) and Planning (What will happen? What do I want to happen?) places the focus back on results and affords a host of additional benefits.

5) Release something early. Unlike a couple of years ago, you probably cannot now afford a six-month analysis phase followed by a data cleansing and integration phase. This work does not deliver tangible business benefits in the short term. Instead, look at getting basic BI capabilities out within weeks. This will allow you to build incrementally upon your successes, gain business/operations experience (by monitoring usage) and build user alliances gradually. How much you can do here depends on your chosen BI platform, but if your immediate needs are relatively modest, all of the following should be seriously considered: building your BI/PM on top of existing reports (data already sourced and cleaned), selectively using in-memory analytics tools (no need for ETL) and SaaS BI.

6) Avoid the metadata conundrum. Metadata is undoubtedly important. It is well known to aid adoption by convincing those making decisions (from the system) that they are using the best data available, BUT it is a complex problem that intersects other disciplines, e.g. data integration, information management and search. Most data objects, whether Word files, Excel files, blog postings, tweets, XML, relational databases, text files, HTML files, registry files, LDAP directories, Outlook data etc., can be expressed relationally, i.e. they make at least some sense in a tabular format. They also span the spectrum of metadata complexity. The end-game is to build on the ideas behind ODBC and JDBC to provide the same logical interface to all of them. A DBMS or file system could then treat them all in the same logical way, as linked databases: extracting the metadata, creating the entities and relationships in the same way and using the same syntax to interrogate, create, read, write and update them. Tools and theories are evolving, but this is rarely achievable in practice. If you can satisfy regulatory requirements with the bare minimum, do it. If you cannot, concentrate on data lineage, building as much of a story around the ETL process as possible. Fewer than 15% of BI users use metadata extensively anyway.
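As a rough illustration of tip 6's idea of treating differently formatted sources “the same logical way”, the sketch below loads a CSV extract and a JSON feed (both hypothetical) into tables and interrogates them with a single SQL query. Note that it copies the data into SQLite rather than linking to it live as an ODBC/JDBC-style driver would, so it shows the tabular principle rather than the end-game itself.

```python
import csv
import io
import json
import sqlite3

# Two hypothetical sources in different formats that both make sense as tables.
csv_text = "customer,region\nAcme,North\nBeta,South\n"
json_text = '[{"customer": "Acme", "complaint": "late delivery"}]'

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer TEXT, region TEXT)")
conn.execute("CREATE TABLE complaints (customer TEXT, complaint TEXT)")

# Express each source relationally: every record becomes a row in a table.
conn.executemany("INSERT INTO customers VALUES (:customer, :region)",
                 csv.DictReader(io.StringIO(csv_text)))
conn.executemany("INSERT INTO complaints VALUES (:customer, :complaint)",
                 json.loads(json_text))

# Once both are tables, one query syntax interrogates them together.
rows = conn.execute("""
SELECT c.customer, c.region, COUNT(p.complaint)
FROM customers AS c LEFT JOIN complaints AS p ON p.customer = c.customer
GROUP BY c.customer, c.region
ORDER BY c.customer
""").fetchall()
print(rows)
```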

Planning a BI strategy for your organisation can be challenging: you need decent industry/vendor awareness, an appreciation of organisational data and, ideally, a handle on budgeting. In no particular order, here are some practicable tips to get started with yours. More will be forthcoming.

1) Start with your Supply Chain. Reducing energy use in data centres is a key IT issue. This is generally driven either by a cost-saving drive or by a Green IT focus (shouldn’t they really be the same thing, though?). However, most organisations budget 2-5% of revenue for IT, yet spend roughly 50% of revenue on all aspects of supply chain management. Basically, there are significantly more savings to be made in the supply chain than in data centres. Large volumes of raw data are generated and stored at each process of the supply chain (plan, source, make, deliver and return) by the automated enterprise applications used at most large, global manufacturers. BI can help determine what information is needed to drive improvements and efficiencies at each process in the supply chain and turn the raw data into meaningful metrics and KPIs.

2) Forget the BI-Search “evolution”. The training costs alone for commercial BI systems are high. Organisations want single (easy and simple) interfaces wherever possible, and a search-based interface appears to be the key to engaging the masses. There has been heavy (and ongoing) speculation over the last couple of years that BI and Search technologies will somehow merge. This approach, however, only really surfaces existing BI reports for more detailed interrogation, e.g. “July Sales Peaks”. A search string is not a rich enough interface to support ad-hoc queries. Think about it: you need either a dedicated language such as MDX or a rich data visualisation package to traverse dimensional data. A search box will never explore the correlation between marketing budget and operating income.

3) Forget “BI for the masses” (for now). BI has been actively used in the enterprise since the early nineties. The expected “BI for the masses” (basically, the SMB market) hasn’t exactly happened. Why is this? It’s because people want to collaborate and jointly come up with ideas, solutions, figures and approaches. They need this for personal, political and commercial reasons. It will change when enterprise SOA is in place, and it might change when consumer technology changes the way enterprise networking works. It will not change in the short term.
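A back-of-the-envelope check on tip 1, assuming a hypothetical organisation with £1bn revenue, IT spend at 3% of revenue and supply chain spend at 50% (both within the ranges quoted in that tip). The saving percentages are illustrative assumptions, not benchmarks.

```python
# Hypothetical organisation; the 3% and 50% shares sit within the ranges quoted in tip 1.
revenue = 1_000_000_000.0  # £1bn annual revenue (placeholder)
it_share, supply_chain_share = 0.03, 0.50

it_spend = revenue * it_share
supply_chain_spend = revenue * supply_chain_share

# Even an ambitious cut in IT spend is small next to a modest supply chain gain.
it_saving = it_spend * 0.10                      # 10% off the entire IT budget
supply_chain_saving = supply_chain_spend * 0.02  # 2% off supply chain spend

print(f"10% IT saving:          £{it_saving:,.0f}")
print(f"2% supply chain saving: £{supply_chain_saving:,.0f}")
```

Under these assumptions, a 2% supply chain improvement is worth more than three times a 10% cut to the whole IT budget.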

(Originally posted 7 March 2009).

Received wisdom tells us that unstructured information makes up 80% of the data in an organisation. Reporting, BI and PM systems are still tied, in the main, to structured information in transactional systems (obtained through ETL, staging, dimensional modelling, what-if modelling and data mining). An opportunity to incorporate unstructured data has existed for some time. The inhibitor is technology. While product sales figures can be extracted over years and then extrapolated to determine likely sales next period, how does a BI solution use the dozens of sales reports, emails, blogs, unstructured data embedded within database fields, call centre logs, reviews and correspondence describing the product as outmoded, expensive or unsafe? If it does not, the organisation may lose out, since this information (the 80%) can affect the decision of how much of the product to produce.
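The structured side of that comparison, extrapolating past sales to the next period, is the easy part. A minimal sketch, assuming a straight-line trend fitted by ordinary least squares (the post does not say which extrapolation method is meant); the sales history is hypothetical. Nothing in it can react to a flood of emails calling the product unsafe.

```python
def linear_forecast(history: list[float]) -> float:
    """Fit y = a + b*t by ordinary least squares over t = 0..n-1 and predict t = n."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two periods of history")
    ts = range(n)
    t_mean = (n - 1) / 2
    y_mean = sum(history) / n
    slope = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, history)) / sum(
        (t - t_mean) ** 2 for t in ts
    )
    intercept = y_mean - slope * t_mean
    return intercept + slope * n


# Hypothetical annual unit sales for one product (placeholders).
sales = [1000, 1100, 1180, 1320, 1400]
print(f"next period: {linear_forecast(sales):.0f} units")
```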

Most organisations currently handle the general need to unlock unstructured data through market sampling techniques such as interviews, questionnaires and group discussions. They attempt to apply structure to the data by categorising it, e.g. “On a scale of one to ten, how satisfied are you with this product?” They either ignore the information already there or transpose it manually, typically by outsourcing. A minority use the only technology that can truly unlock unstructured data within the enterprise right now: text mining. Note that this is different from both Sentiment Analysis (too interpretive right now) and the Semantic Web (too much data integration required right now).

Many of these organisations, however, believe text mining simply makes information easier to find. This is a function of currently available products. The principal MSFT text mining capability is in its high-end search platform, FAST. Such products use text mining techniques to cluster related unstructured content. It is not enough to link data loosely, however; it needs to be linked at an entity (ERM) level so that it is subject to identical policies of governance and accountability and, crucially, the same decision-making criteria. It is worth stating the obvious: text mining is like data mining (except it’s for text!), establishing relationships between content and linking this information together to produce new content; in this case new data (whereas data mining produces new information).
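A deliberately simple, dictionary-based stand-in for text mining (real text mining products use far richer linguistic techniques than keyword matching). It turns free-text emails and call logs into (product, issue) rows keyed to an existing Product entity, i.e. new structured data rather than a loose link to a document. The product codes, issue vocabulary and documents are all hypothetical.

```python
import re

# Hypothetical structured entity: the product catalogue, keyed by product code.
PRODUCTS = {"KX-100": "Kettle", "TX-200": "Toaster"}

# Hypothetical vocabulary mapping free-text phrases to a controlled "issue" attribute.
ISSUE_TERMS = {
    "outdated": "outmoded", "old-fashioned": "outmoded",
    "overpriced": "expensive", "too expensive": "expensive",
    "unsafe": "unsafe", "caught fire": "unsafe",
}


def mine(documents: list[str]) -> list[tuple[str, str]]:
    """Turn free text into (product_code, issue) rows linked to known products."""
    code_pattern = re.compile("|".join(map(re.escape, PRODUCTS)))
    rows = []
    for doc in documents:
        lowered = doc.lower()
        issues = {issue for term, issue in ISSUE_TERMS.items() if term in lowered}
        for code in set(code_pattern.findall(doc)):
            rows.extend((code, issue) for issue in sorted(issues))
    return sorted(rows)


emails = [
    "Customer says the KX-100 is overpriced and looks old-fashioned.",
    "Call log: TX-200 caught fire on first use. Unsafe!",
]
print(mine(emails))
```

Because the output rows carry the same product codes as the structured data, they can be joined to sales figures and governed like any other fact.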

Consider a scenario where an order management application retrieves all orders for a customer. At the same time, search technologies return all policy documents relating to that customer segment, together with scanned correspondence stating that dozens of the orders were returned due to defects. The user now has to perform a series of manual steps: read and understand the policy documents, determine which fields of the returned orders identify policy adherence, check the returned orders against these fields, read and understand the correspondence, make a list of all orders that were returned (perhaps by logging them in a spreadsheet), calculate the client value by subtracting the value of those orders from the value displayed in the application and, finally, modify their behaviour towards the customer based upon the customer’s value to the organisation. Human error at any step can adversely affect customer value and experience. It would be much better to build and propagate an ERM on the fly (by establishing “Policy” as a new entity with a relationship to “Customer Segment”), grouping the orders as they are displayed while at the same time removing returned orders. I’m not aware of any organisations currently working in this way. This is the technology inhibitor, and it is where text mining needs to go next.
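The listing-and-subtracting steps of that scenario can be sketched as follows, assuming (hypothetically) that order numbers look like `SO-1001` and that the scanned correspondence, once OCR'd, mentions an order number and the word “returned” in the same sentence. It automates only those steps; it does not model the Policy or Customer Segment entities.

```python
import re

# Hypothetical orders for one customer, as the order management application returns them.
orders = {"SO-1001": 250.0, "SO-1002": 400.0, "SO-1003": 150.0}

# Hypothetical scanned correspondence after OCR.
correspondence = [
    "Please note order SO-1002 was returned due to defects.",
    "Order SO-1003 returned: defective casing.",
    "Thanks for the quick delivery of SO-1001.",
]

# Assumed pattern: an order number followed, within the same sentence, by "returned".
RETURNED = re.compile(r"\b(SO-\d+)\b[^.]*\breturned\b", re.IGNORECASE)

returned = {m.group(1) for text in correspondence for m in RETURNED.finditer(text)}
kept = {order: value for order, value in orders.items() if order not in returned}
customer_value = sum(kept.values())

print("returned:", sorted(returned))
print("customer value:", customer_value)
```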

(Originally posted 28 January 2009).

PerformancePoint Server (PPS) has been reshuffled (http://blogs.msdn.com/sharepoint/archive/2009/01/23/microsoft-business-intelligence-strategy-update-and-sharepoint.aspx). PPS has been a successful MSFT technology. It has taken MSFT from a standing start to a player in the competitive PM market, to the point where Gartner’s last Magic Quadrant for PM placed MSFT in the Visionary category. As with Content Management Server previously, MSFT will consolidate PPS Monitoring and Analytics into its flagship platform, MOSS (Microsoft Office SharePoint Server), from mid-2009. PPS Planning will be withdrawn. The core MSFT BI stack remains unchanged.

Most customers will actually benefit from this development. Customers that want PPS Monitoring and Analytics (the bulk of those with any interest in PPS) should see a reduction in licensing costs: a MOSS Enterprise CAL is cheaper than a PPS CAL, and many customers will already own one.

Why did this happen though?

The target market for PPS Planning (in its current form) is saturated. Most organisations that need end-to-end PM already have solutions. MSFT had only a modest share of the PM application market: SAP, IBM and Oracle between them took around half. The market was also fragmented at the SME end, with around half again held by smaller vendors, often building upon the MSFT BI stack (e.g. Calumo).

Customers in newer PM sectors (e.g. Insurance/Legal) and the SME market were gradually beginning to adopt PPS Planning, but working with PPS models was arguably more of a technical activity than they were used to. PPS Planning lacked web-based data entry. The Excel add-in could be slow when connecting to PPS or publishing drafts. PPS was well served with complex financial consolidation options, but this is a niche activity (MSFT makes revenue from CALs, after all). MSFT needed to democratise the PM market, but this required a corresponding change in business practice, with planning activities becoming both decentralised and collaborative. PPS Planning was unlike the other products in the MSFT BI suite and ultimately did not drive forward the core MSFT proposition of “BI for the masses” (http://www.microsoft.com/presspass/features/2009/jan09/01-27KurtDelbeneQA.mspx).

Finally, there may be an alliance angle. MSFT jointly launched Duet with SAP, allowing easy interaction between SAP and MSFT Office environments (esp. Excel and Outlook). There could be a strategic direction here to add financial planning and consolidation to a future release of Duet (or some future combination of Duet, Gemini and/or Dynamics). This would tightly integrate MSFT and SAP at a PM level. What SAP would gain from this is not immediately clear, given its acquisition of OutlookSoft (and incorporation of it into its own product suite as SAP Business Planning & Consolidation [BPC]), but the last year has seen a significant rise in existing SAP customers wanting to bolt PPS/SSRS onto their SAP deployments, basically because it is difficult for in-house resources to extract the data from SAP and present it themselves, or because quotes from SIs routinely run to tens of thousands of dollars per report. Partnering with MSFT here and using PPS Monitoring and Analytics from within MOSS may improve SAP client satisfaction around data access. MSFT would similarly benefit from increased enterprise access.